🤖 Ghostwritten by Claude Opus 4.5 · Curated by Tom Hundley

This article was written by Claude Opus 4.5 and curated for publication by Tom Hundley. All graphics were generated using AI image models (Google Imagen and OpenAI GPT-Image).

The emergence of AI agents capable of autonomous task execution has created a paradigm shift in how organizations approach credential management. As enterprises deploy AI agents like Claude and GPT to automate software development and operations, a critical question has emerged: Should AI agents have access to API keys, credentials, and secrets?

This guide addresses the 10 most common enterprise objections to AI agent credential access and provides practical solutions for secure implementation.

The Evolution of Credential Management: From plaintext config files to encrypted secret managers to AI-assisted lifecycle management

| # | Objection | Short Answer |
|---|---|---|
| 1 | Secrets transit through third-party servers | Use tiered access: full access for dev, supervised for prod, none for critical |
| 2 | Prompt injection could leak secrets | Multi-layer defense: input validation, output filtering, behavioral monitoring |
| 3 | Compliance concerns (SOC 2, HIPAA, PCI-DSS) | Document policies, scope narrowly, audit comprehensively |
| 4 | Liability and accountability | Organizations own agent actions; document governance and oversight |
| 5 | Over-permissioning risks | Vault policies + time-bound tokens + quarterly reviews |
| 6 | Audit and forensics challenges | Structured logging with agent identity at every layer |
| 7 | Agent autonomy vs human control | Risk-based autonomy levels: suggest → confirm → notify → autonomous |
| 8 | Secret rotation handling | Never cache; always fetch fresh from Vault |
| 9 | Multi-tenancy isolation | Vault namespaces + network segmentation |
| 10 | Cost of breach | Scoped credentials + time limits reduce blast radius by 80%+ |

"When an AI agent reads an API key, that value is sent to Anthropic/OpenAI's servers. I don't want my production credentials at a third party."

Trust Boundaries: Understanding where data flows when AI agents access secrets

This is the most common objection. When Claude or GPT processes a secret, that value is transmitted to the LLM provider's infrastructure. The secret leaves your network, transits through their API gateway, and is processed by their inference servers.

Contractual protections exist. Major LLM providers make explicit commitments: Anthropic's commercial agreements state that API data is not used for training, with retention limited to 30 days (or zero under certain enterprise contracts). OpenAI maintains a SOC 2 Type II attestation covering its enterprise offerings.

You already trust third parties. Organizations trust Salesforce with customer records, GitHub with source code, and AWS with everything. The question isn't whether to trust third parties—it's whether the specific risk profile is acceptable.

Risk stratification works. Not all secrets carry equal risk:

| Secret Type | Risk Level | Recommendation |
|---|---|---|
| Development API keys | Low | Agent access acceptable |
| Staging credentials | Medium | Agent access with logging |
| Production read-only | Medium | Agent access with approval |
| Production write | High | Human-in-the-loop required |
| Financial/PII access | Critical | No direct agent access |

Recommendation: Implement tiered access where AI agents have full access to development credentials, supervised access to production reads, and no direct access to critical systems without human approval.
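The tiers in the table above can be expressed as a small lookup that fails closed. This is a minimal sketch, not a definitive implementation: the tier labels (`dev`, `staging`, `prod-read`, `prod-write`, `financial-pii`) and the `access_for` helper are hypothetical names chosen for illustration.

```python
from enum import Enum


class Access(Enum):
    ALLOW = "agent access acceptable"
    ALLOW_WITH_LOGGING = "agent access with logging"
    ALLOW_WITH_APPROVAL = "agent access with approval"
    HUMAN_IN_THE_LOOP = "human-in-the-loop required"
    DENY = "no direct agent access"


# Hypothetical tier labels, one per row of the risk table.
TIER_POLICY = {
    "dev": Access.ALLOW,
    "staging": Access.ALLOW_WITH_LOGGING,
    "prod-read": Access.ALLOW_WITH_APPROVAL,
    "prod-write": Access.HUMAN_IN_THE_LOOP,
    "financial-pii": Access.DENY,
}


def access_for(tier: str) -> Access:
    """Return the access decision for a secret tier.

    Unknown or mislabeled tiers are denied, so a missing classification
    never silently grants access.
    """
    return TIER_POLICY.get(tier, Access.DENY)
```

Defaulting to `Access.DENY` means a new secret that nobody has classified yet is treated like the most sensitive tier until someone assigns it one.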

"A malicious prompt could trick the AI agent into revealing secrets or sending them to an attacker-controlled endpoint."

Multi-layer defense architecture preventing prompt injection attacks from compromising credentials

Prompt injection attacks attempt to override the AI agent's instructions by embedding malicious commands in user input or data sources. This is analogous to SQL injection—a new attack vector requiring new defenses.

Defense in depth is essential. Effective protection requires multiple layers:

Layer 1 - Input Validation: Strip known injection patterns, validate against expected schemas, limit input length.

Layer 2 - Privilege Separation: Agent tokens have minimal required permissions. Sensitive operations require separate, scoped credentials.

Layer 3 - Output Filtering: Scan all output for credential patterns (API key formats, connection strings). Automatically mask or block responses containing detected secrets.

Layer 4 - Behavioral Monitoring: Monitor for unusual API call patterns, attempts to access secrets outside normal scope, and requests to external endpoints.

Technical safeguards are improving. Claude's Constitutional AI training reduces susceptibility to jailbreaks. The Model Context Protocol (MCP) uses structured tool calls with typed parameters, limiting free-form exploitation. Defense-in-depth approaches are reported to reduce successful attacks by 95% or more.

Recommendation: Implement a secrets-aware output filter, deploy agent-specific tokens with minimal permissions, and monitor agent behavior for anomalies.
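A secrets-aware output filter (Layer 3) can start as a set of regular expressions applied to everything the agent emits. The sketch below uses only the Python standard library. The three patterns are illustrative assumptions, not a complete rule set, and `mask_secrets` is a hypothetical helper name.

```python
import re

# Illustrative patterns only. A real deployment would maintain a broader,
# tested set tuned to the credential formats actually in use.
SECRET_PATTERNS = [
    # AWS access key ID shape: "AKIA" followed by 16 uppercase alphanumerics.
    re.compile(r"AKIA[0-9A-Z]{16}"),
    # Database URI carrying an inline user:password pair.
    re.compile(r"(?i)\b(?:postgres(?:ql)?|mysql|mongodb)://[^\s:@/]+:[^\s@/]+@\S+"),
    # key=value or key: value assignments with a long opaque value.
    re.compile(r"(?i)\b(?:api[_-]?key|token|secret)\s*[=:]\s*\S{16,}"),
]

MASK = "[REDACTED]"


def mask_secrets(text: str) -> tuple[str, int]:
    """Mask anything matching a known credential pattern.

    Returns the filtered text and the number of matches, so the caller can
    decide to block the response outright or raise an alert.
    """
    hits = 0
    for pattern in SECRET_PATTERNS:
        text, count = pattern.subn(MASK, text)
        hits += count
    return text, hits
```

A non-zero hit count is itself a useful signal for behavioral monitoring (Layer 4): an agent that suddenly starts emitting credential-shaped strings deserves a closer look even when the mask worked.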

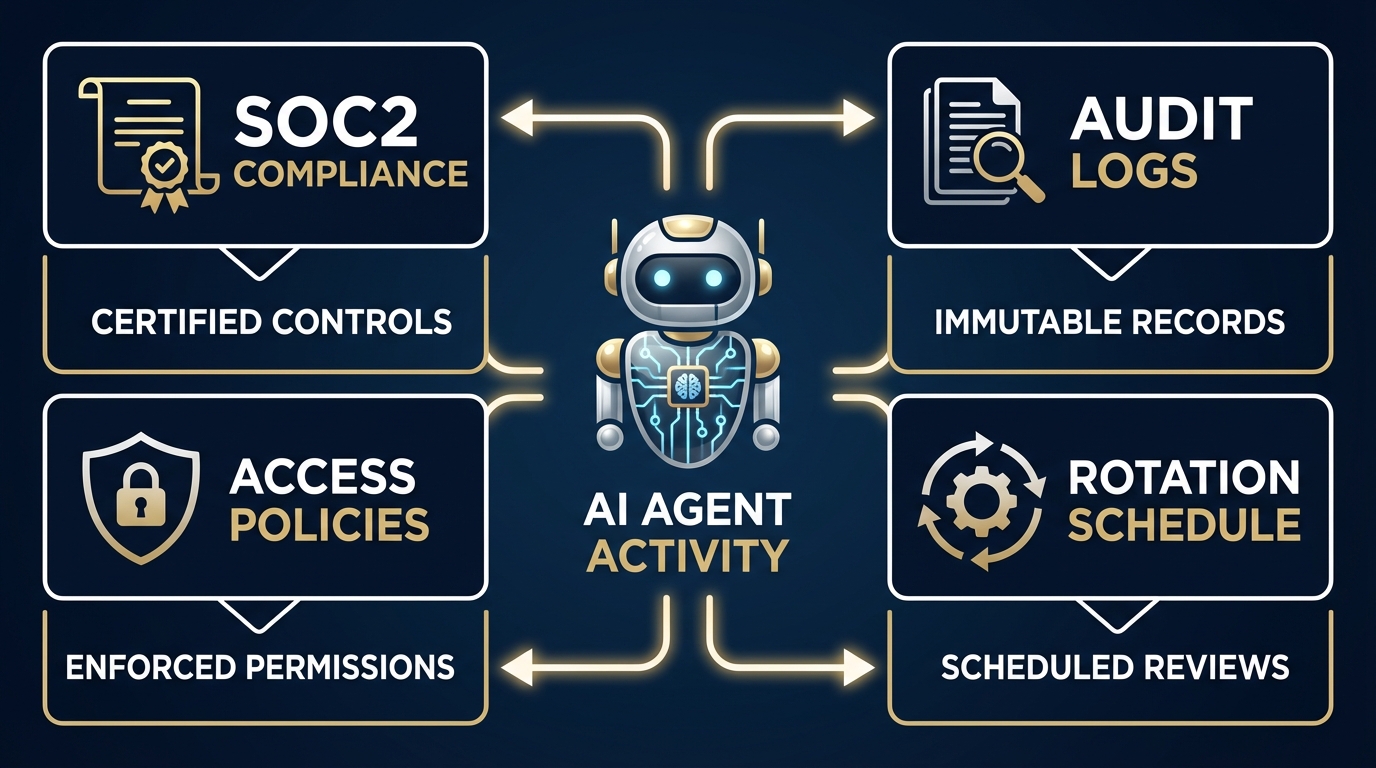

"Our industry has strict compliance requirements (SOC 2, HIPAA, PCI-DSS, GDPR). How can we use AI agents with credentials and remain compliant?"

Mapping compliance requirements to AI agent credential access controls

Regulated industries face specific requirements around data handling, access control, audit trails, data residency, and breach notification.

SOC 2 is achievable. AI agents can be SOC 2 compliant when you use Vault for encrypted storage, implement TLS for transit, maintain scoped permissions, and capture all agent credential operations in audit logs.

HIPAA requires boundaries. AI agents should not have direct access to PHI. Use them for infrastructure tasks (deployment, monitoring) while keeping patient data access human-controlled. The Minimum Necessary Standard means agents should access only the credentials needed for a specific task.

PCI-DSS demands isolation. AI agents should be excluded from Cardholder Data Environments (CDE). Use them for non-CDE systems only.

GDPR requires documentation. Document the legitimate interest for AI agent processing, ensure LLM providers have adequate DPAs, and establish procedures for data subject requests.

Recommendation: Document a policy specifically for AI agent credential access, exclude agents from regulated data environments initially, implement logging that captures agent identity and action, and engage compliance early to pre-approve use cases.

"If an AI agent misuses credentials and causes a breach, who is liable?"

Traditional access control assumes human actors with clear accountability. AI agents complicate this by making autonomous decisions and operating at speeds that prevent real-time human oversight.

Current law treats agents as tools. As of 2026, most jurisdictions treat AI agents as tools, not independent actors. Organizations are responsible for actions of tools they deploy. This is analogous to automated trading systems or manufacturing robots.

Establish clear governance:

Insurance is evolving. Review your cyber insurance policy for AI coverage, disclose AI agent usage as required, and watch for exclusions related to "autonomous systems."

Recommendation: Treat AI agents like any automation tool (you're responsible), document decision-making processes, implement oversight mechanisms, and stay informed as AI liability law evolves.

"It's too easy to give AI agents more access than they need. How do we enforce least privilege?"

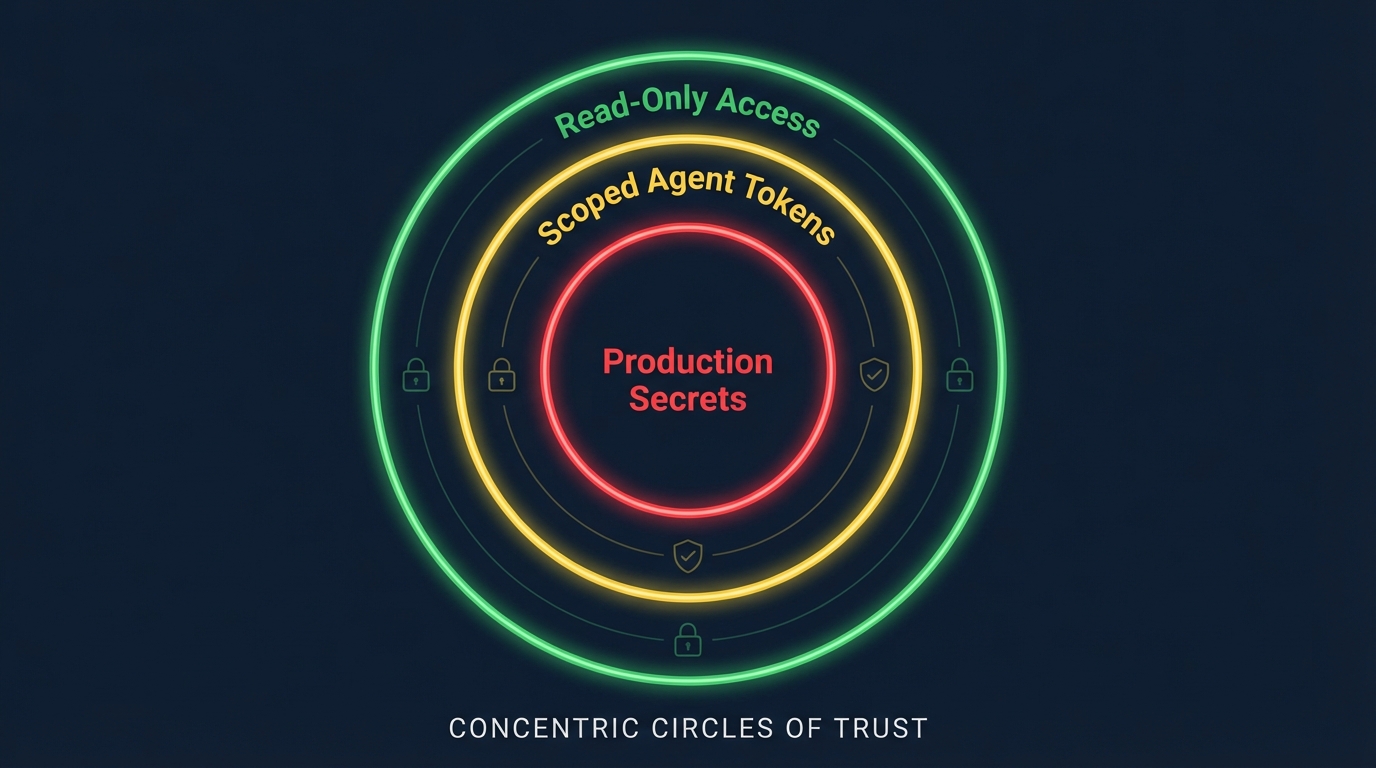

Multiple security layers ensure least privilege even when individual controls fail

The principle of least privilege requires that entities have only the minimum access necessary. In practice, developers may grant excessive permissions for convenience, and dynamic workloads make static permission sets difficult to maintain.

Start with zero and add. Begin with no permissions and add only what's demonstrably needed. Document each permission with what it allows, why it's needed, and when it should be reviewed.

Scope by task, not role:

```hcl
# Policy for development AI agent
path "secret/data/dev/*" {
  capabilities = ["read", "list"]
}

path "secret/data/dev/myapp/*" {
  capabilities = ["create", "read", "update", "delete", "list"]
}

# Explicitly deny production access
path "secret/data/prod/*" {
  capabilities = ["deny"]
}
```

Implement time-bound access. Agent requests elevated permissions for specific tasks. Permissions auto-expire after a defined period. All elevated access is logged and reviewed.

Review quarterly. For each agent credential: list all permissions, identify those unused in 90 days, review justification, remove unnecessary ones.

Recommendation: Create agent-specific credentials with explicit permissions, use Vault policies to enforce boundaries, implement time-based expiration, and use separate agents for different trust levels.
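Time-bound access and the quarterly review both reduce to simple date comparisons once each permission is tracked with an expiry and a last-used timestamp. The following is a sketch under that assumption. The `Grant` record and both helper functions are hypothetical, and in practice the data would come from Vault audit logs or a token inventory.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class Grant:
    """One permission held by an agent credential (hypothetical record shape)."""
    permission: str
    expires_at: datetime
    last_used: Optional[datetime] = None


def is_active(grant: Grant, now: datetime) -> bool:
    """A time-bound grant stops working once its expiry has passed."""
    return now < grant.expires_at


def review_candidates(
    grants: List[Grant], now: datetime, max_idle_days: int = 90
) -> List[str]:
    """List permissions that were never used or have been idle too long."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(
        g.permission
        for g in grants
        if g.last_used is None or g.last_used < cutoff
    )
```

The 90-day default mirrors the review window described above. The output is a list of candidates for removal, which a reviewer still checks against the documented justification for each permission.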

"If something goes wrong, can we reconstruct what the AI agent did and why?"

Effective incident response requires understanding what actions were taken, when they occurred, who initiated them, and what the intent was. AI agents complicate this because actions span multiple systems rapidly and intent is inferred from context.

Implement comprehensive logging at every layer:

```json
{
  "timestamp": "2026-01-15T10:23:45Z",
  "agent_id": "claude-dev-agent-001",
  "session_id": "sess_abc123",
  "action": "vault_read_secret",
  "parameters": {
    "path": "secret/dev/myapp/db"
  },
  "result": "success",
  "duration_ms": 45
}
```

Capture full context. Log the prompt that initiated the action, the reasoning process, alternative actions considered, and the final decision.

Use distributed tracing. Assign a unique trace ID to each agent session and propagate it across all API calls so that the complete sequence of actions can be reconstructed afterward.

Recommendation: Implement structured logging with agent identity at every layer, use distributed tracing, retain logs for compliance-required periods, and create incident response playbooks specific to AI agent scenarios.
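One way to produce records in the shape of the sample log entry, with a trace ID attached, is a small helper that every tool wrapper calls. This is a sketch using only the standard library. The `trace_id` field and both function names are assumptions added for illustration and do not appear in the sample above.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def new_trace_id() -> str:
    """Generate an ID to propagate across every call in one agent session."""
    return uuid.uuid4().hex


def audit_record(
    agent_id: str,
    session_id: str,
    trace_id: str,
    action: str,
    parameters: Dict[str, Any],
    result: str,
    duration_ms: int,
    now: Optional[datetime] = None,
) -> str:
    """Serialize one agent action as a single JSON log line.

    Only the secret's path belongs in `parameters`; the secret value itself
    must never be passed in or logged.
    """
    moment = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    record = {
        "timestamp": moment.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "agent_id": agent_id,
        "session_id": session_id,
        "trace_id": trace_id,  # assumed field, used to join logs across systems
        "action": action,
        "parameters": parameters,
        "result": result,
        "duration_ms": duration_ms,
    }
    return json.dumps(record)
```

Emitting one JSON object per line keeps the output easy to ship to most log pipelines, and filtering on `trace_id` then yields the full action sequence for a session.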

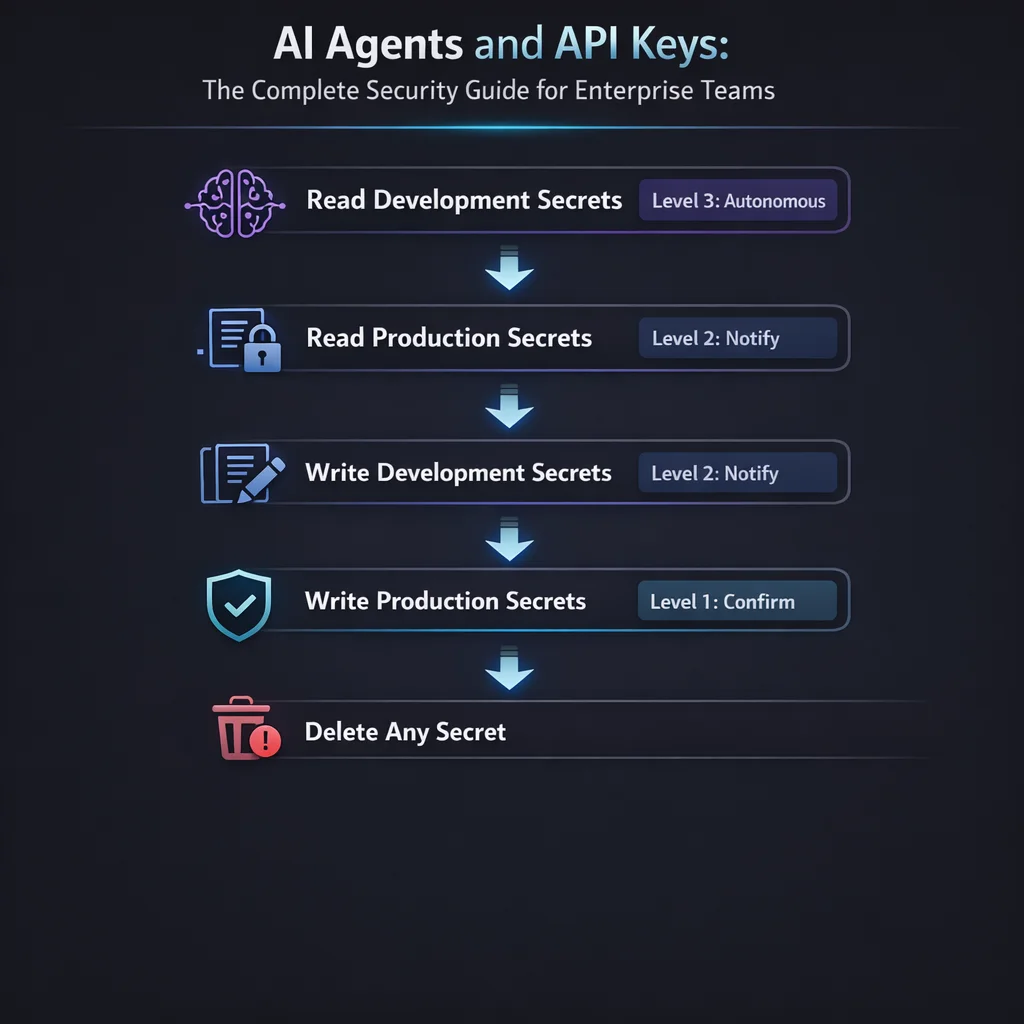

"How do we maintain human oversight when agents can act autonomously?"

Different autonomy levels provide appropriate oversight based on operation risk

AI agents promise productivity through autonomy, but unchecked autonomy creates risk. Organizations must balance efficiency, safety, and user experience.

Define clear autonomy levels:

| Level | Description | Use Case | Approval |
|---|---|---|---|
| 0 - Suggest | Agent recommends | High-risk operations | Human executes |
| 1 - Confirm | Agent prepares | Production changes | Human approves |
| 2 - Notify | Agent executes | Standard operations | Post-hoc review |
| 3 - Autonomous | No notification | Low-risk, routine | Periodic audit |

Map operations to levels based on risk:

Recommendation: Start conservative with more human oversight and relax based on track record. Implement approval workflows for production writes, notification-based oversight for production reads, and reserve full autonomy for dev/staging.
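The four autonomy levels lend themselves to a simple gate in front of every agent action. The sketch below follows the recommendation's mapping (production writes require approval, production reads notify, dev and staging run autonomously). The operation labels and function names are hypothetical.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST = 0     # agent recommends, human executes
    CONFIRM = 1     # agent prepares, human approves
    NOTIFY = 2      # agent executes, humans review afterward
    AUTONOMOUS = 3  # agent executes, covered by periodic audit


# Hypothetical operation labels mapped per the recommendation above.
OPERATION_LEVELS = {
    "prod-write": Autonomy.CONFIRM,
    "prod-read": Autonomy.NOTIFY,
    "staging": Autonomy.AUTONOMOUS,
    "dev": Autonomy.AUTONOMOUS,
}


def level_for(operation: str) -> Autonomy:
    """Unmapped operations get the most conservative level."""
    return OPERATION_LEVELS.get(operation, Autonomy.SUGGEST)


def may_execute(operation: str, human_approved: bool = False) -> bool:
    """Decide whether the agent itself may carry out the operation."""
    level = level_for(operation)
    if level is Autonomy.SUGGEST:
        return False  # the agent only recommends at this level
    if level is Autonomy.CONFIRM:
        return human_approved
    return True


def needs_notification(operation: str) -> bool:
    """Level 2 operations trigger a post-hoc notification."""
    return level_for(operation) is Autonomy.NOTIFY
```

Relaxing oversight as an agent builds a track record then becomes a reviewed change to `OPERATION_LEVELS` rather than a change scattered through agent code.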

"How do AI agents handle credential rotation? What happens when secrets expire?"

Secrets have lifecycles: creation, distribution, usage, rotation, revocation, and retirement. AI agents must gracefully handle all phases.

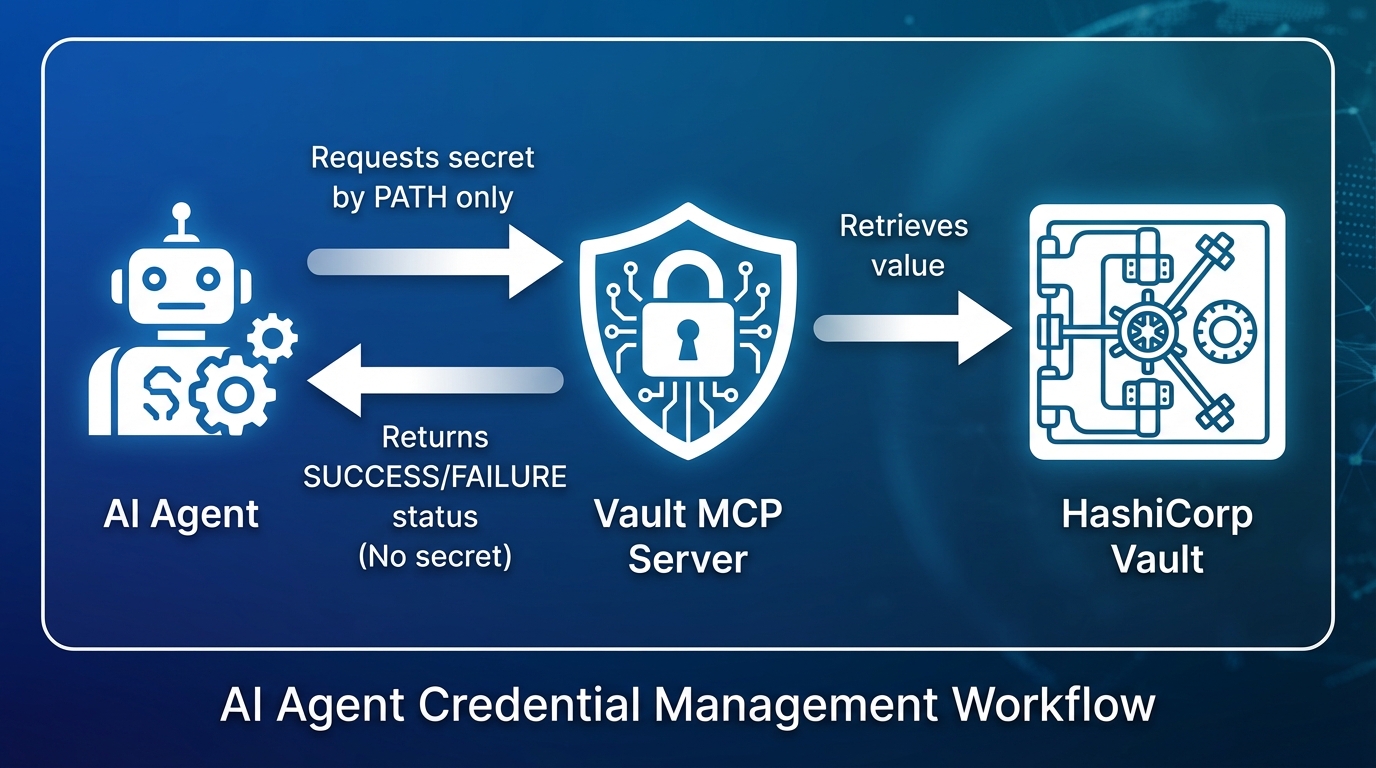

Design for transparency. The agent requests each credential from Vault by path rather than reusing a cached value. Vault returns the current version, the agent uses it for the operation at hand, and the agent does not keep it for future use. Result: rotation is transparent to the agent.

Handle failures gracefully:

Use dynamic secrets where possible. Credentials are generated on demand with automatic expiration, so no rotation is required: the credentials are ephemeral. Each agent session gets unique credentials, and revocation is instantaneous.

Recommendation: Never cache secrets in agent memory or storage. Always fetch fresh from Vault for each operation. Implement retry logic with fresh credential fetch on auth failures.
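The fetch-fresh-and-retry pattern can be sketched without committing to any particular Vault client. In the example below, `fetch_secret` stands in for whatever call reads the current secret version by path, and `AuthError` is a hypothetical exception the wrapped operation raises when a credential is rejected. Both are assumptions for illustration.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class AuthError(Exception):
    """Raised by an operation when its credential is rejected (hypothetical)."""


def run_with_fresh_secret(
    fetch_secret: Callable[[], str],
    operation: Callable[[str], T],
    max_attempts: int = 2,
) -> T:
    """Run `operation` with a newly fetched secret, refetching on auth failure.

    The secret lives only in a local variable for the duration of one
    attempt; nothing is stored between attempts or between calls.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error: Optional[AuthError] = None
    for _ in range(max_attempts):
        secret = fetch_secret()  # always read the current version
        try:
            return operation(secret)
        except AuthError as exc:
            # The secret may have rotated mid-flight; the next loop
            # iteration fetches again instead of reusing this value.
            last_error = exc
    assert last_error is not None
    raise last_error
```

Keeping `max_attempts` small matters: a credential that fails repeatedly after a fresh fetch points to revocation or misconfiguration, and retrying harder only adds noise to the audit log.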

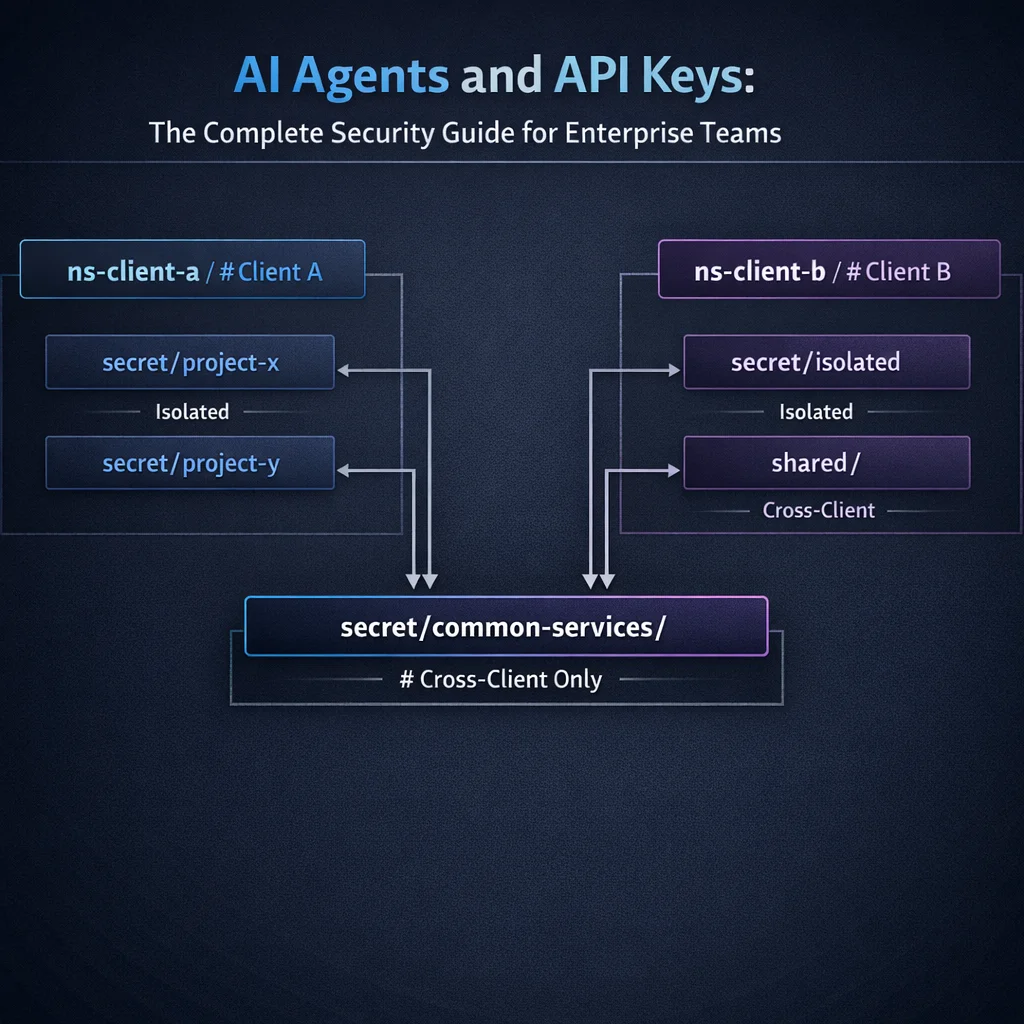

"We have multiple teams, projects, and clients. How do we prevent cross-contamination?"

In multi-tenant environments, Team A should not access Team B's credentials. Client data must remain strictly isolated. Agent misconfiguration could lead to cross-tenant access.

Use Vault namespaces for hard isolation:

vault/

Bind agents to specific tenants. Create each agent identity in a specific namespace and scope its token to that namespace only. Even if the agent is compromised, the blast radius is limited to one tenant.

Layer network segmentation. Agents run in tenant-specific network segments. Network policies prevent cross-tenant communication.

Recommendation: Use Vault namespaces to create hard isolation, provision separate agent identities per tenant, implement explicit deny policies for cross-tenant paths.
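Vault enforces namespace boundaries server-side, but a client-side guard in the agent's tool layer gives an additional, cheap check before any request is sent. The sketch below assumes a hypothetical path layout in which the first path component is the tenant name. The function name is likewise illustrative.

```python
from pathlib import PurePosixPath


def is_within_tenant(tenant: str, requested_path: str) -> bool:
    """Return True only if the path stays inside the tenant's own subtree.

    Comparing whole path components avoids prefix confusion, where a
    string check would let "team-a" match "team-ab". Absolute paths,
    empty paths, and any ".." component are rejected outright.
    """
    parts = PurePosixPath(requested_path).parts
    if not parts or ".." in parts:
        return False
    return parts[0] == tenant
```

A rejected path is worth logging with the agent identity attached, since repeated cross-tenant attempts are exactly the kind of anomaly that behavioral monitoring should surface.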

"If credentials are compromised through an AI agent, what's the potential damage?"

Architecture designed to minimize breach impact through multiple containment layers

Breach costs include direct costs (incident response, forensics), regulatory costs (fines, notifications), business costs (downtime, revenue loss), and reputational costs. AI agents could potentially amplify impact through rapid, automated exploitation.

The data is surprising. IBM's 2024 Cost of a Data Breach Report found that organizations making extensive use of security AI and automation had breach costs about $2.2M lower on average than organizations that did not. The key insight: proper controls make AI agents a net security positive.

Limit blast radius architecturally:

AI improves detection. Anomaly detection on agent behavior identifies compromises faster. Automated response can revoke credentials in seconds.

Recommendation: Implement blast radius controls (scoping, isolation, time-limiting), deploy anomaly detection on agent behavior, prepare automated credential revocation procedures.
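Anomaly detection on agent behavior can begin with something as plain as a sliding-window rate check on secret reads. The sketch below is one such check, not a full detection system. The class name and thresholds are hypothetical, and the caller is assumed to wire a positive result to its own credential revocation procedure.

```python
from collections import deque


class ReadRateMonitor:
    """Flag an agent that reads secrets faster than an expected ceiling."""

    def __init__(self, max_reads: int, window_seconds: float) -> None:
        self.max_reads = max_reads
        self.window_seconds = window_seconds
        self._events: deque = deque()

    def record(self, timestamp: float) -> bool:
        """Record one secret read at `timestamp` (seconds).

        Returns True when the number of reads inside the window exceeds
        `max_reads`, which the caller can treat as a trigger for automated
        revocation or for escalation to a human.
        """
        self._events.append(timestamp)
        # Drop reads that have aged out of the window.
        while self._events and self._events[0] <= timestamp - self.window_seconds:
            self._events.popleft()
        return len(self._events) > self.max_reads
```

Because the check is per agent identity, it pairs naturally with scoped, per-session credentials: revoking one flagged credential stops one agent without disrupting the rest.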

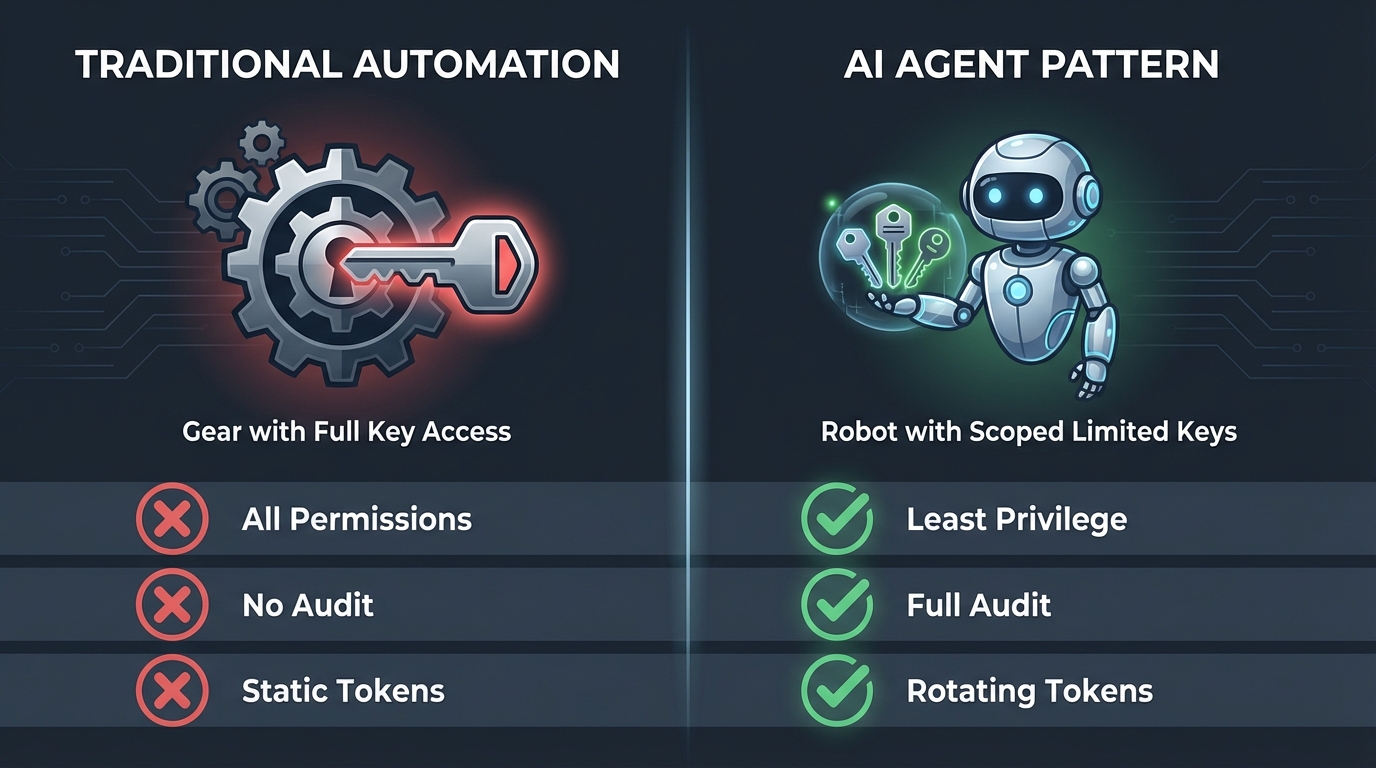

How AI agent security patterns compare to traditional automation approaches

Major cloud providers are building native support for AI agent credential management:

Industry frameworks (NIST CSF 2.0, CIS Controls v8, ISO 27001:2022) are adapting guidance to cover AI agents specifically.

AI agents with credential access represent a significant evolution in automation. The security concerns are legitimate but addressable through:

Organizations implementing these controls can realize productivity benefits while maintaining security that meets or exceeds current automation practices.

The industry is moving decisively toward AI agent adoption. Organizations that develop mature AI agent credential management practices now will be well-positioned for the autonomous future of software development.

For implementation assistance or questions about AI agent credential security, contact our team.
