🤖 Ghostwritten by Claude · Curated by Tom Hundley

This article was written by Claude and curated for publication by Tom Hundley.

The promise is intoxicating: generative AI that produces unlimited content, slashes production costs, and frees your team for strategic work. The reality is more sobering. According to a 2025 MIT study, around 95% of generative AI pilots deliver no measurable effect on profit and loss.

The difference between the 5% that succeed and the 95% that fail isn't the technology; it's the framework. Most generative AI projects fail for governance reasons, not model quality. Workflows, data, and roles are rarely redesigned around responsible AI use.

This article presents a practical framework for CMOs who want to harness AI content generation at scale while preserving what makes their brand distinctive.

Here's the uncomfortable truth: without a documented voice guide and active oversight, generative tools default to neutral. Neutrality, in a crowded feed, is indistinguishable from anonymity.

Generic AI output is recognizable at this point. Readers have developed antibodies to the bland, hedge-everything, enthusiasm-lacking prose that unguided LLMs produce. If your AI content sounds like everyone else's AI content, you've achieved efficiency at the cost of effectiveness.

The solution isn't to avoid AI; it's to train it properly and govern it rigorously.

The adoption curve is steep and accelerating:

The organizations seeing ROI growth aren't the ones using AI carelessly. They're the ones that have built frameworks for quality and governance.

Successful AI content implementation requires systematic frameworks that define content objectives, audience segmentation, brand voice parameters, and quality control checkpoints. Here's a practical structure:

Before any AI touches your content, document these elements:

| Element | Description | Update Frequency |
|---|---|---|
| Voice attributes | 5-7 adjectives that define how your brand sounds | Annually |
| Tone guidelines | How voice adapts across contexts (support vs. sales vs. thought leadership) | Quarterly review |
| Language rules | Terms you use, terms you avoid, industry-specific conventions | Ongoing |
| Examples library | 20-30 exemplar pieces that embody your voice at its best | Quarterly additions |

This isn't optional polish. Preserving brand voice requires custom reference data, style guide integration, and continuous human evaluation.
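To make the documented elements usable by tooling, they can be stored as structured data rather than a PDF. The sketch below is one possible shape, not a prescribed format: the `VoiceGuide` class, its field names, and the sample terms are all hypothetical. It shows how the "language rules" row could drive a simple automated check for terms the brand avoids.

```python
import re
from dataclasses import dataclass, field


@dataclass
class VoiceGuide:
    """Hypothetical container for the four documented elements."""
    voice_attributes: list[str]          # the 5-7 adjectives
    tone_guidelines: dict[str, str]      # context -> how the voice adapts
    preferred_terms: dict[str, str]      # avoided term -> preferred replacement
    exemplar_ids: list[str] = field(default_factory=list)  # examples library


def find_language_violations(text: str, guide: VoiceGuide) -> list[tuple[str, str]]:
    """Return (avoided_term, preferred_term) pairs found in the text."""
    violations = []
    for avoided, preferred in guide.preferred_terms.items():
        # Whole-word, case-insensitive match, so "leverage" does not match "leveraged".
        if re.search(rf"\b{re.escape(avoided)}\b", text, flags=re.IGNORECASE):
            violations.append((avoided, preferred))
    return violations


# Sample values are illustrative assumptions, not taken from a real brand.
guide = VoiceGuide(
    voice_attributes=["direct", "warm", "practical", "curious", "confident"],
    tone_guidelines={"support": "patient and concrete", "sales": "specific, no hype"},
    preferred_terms={"leverage": "use", "synergy": "collaboration"},
)

draft = "We leverage AI to create synergy across teams."
violations = find_language_violations(draft, guide)
for avoided, preferred in violations:
    print(f"Replace '{avoided}' with '{preferred}'")
```

A check like this only covers the mechanical part of the guide. Voice attributes and tone still need human evaluation.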

Generic prompts produce generic output. Most content creators can get a basic AI workflow running in 2-3 days, with full optimization taking 2-3 weeks when they follow systematic brand training protocols.

Training components:

The prompt engineering reality: Common AI content pitfalls include over-automation without human oversight and poor prompt engineering. Solutions involve developing comprehensive prompt libraries that capture brand voice and establishing continuous monitoring systems.
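A prompt library entry can be as simple as a reusable template that injects the brand's voice parameters into every request. The following is a minimal sketch using Python's standard `string.Template`. The template wording, the `build_prompt` helper, and the sample brand values are assumptions for illustration, not a recommended prompt.

```python
from string import Template

# Hypothetical template; real wording should come from your own voice guide.
BRAND_PROMPT = Template(
    "You are writing for $brand.\n"
    "Voice: $attributes.\n"
    "Tone for this context ($context): $tone.\n"
    "Never use these terms: $avoid.\n"
    "Match the style of these exemplars:\n$exemplars\n\n"
    "Task: $task"
)


def build_prompt(brand: str, attributes: list[str], context: str, tone: str,
                 avoid: list[str], exemplars: list[str], task: str) -> str:
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return BRAND_PROMPT.substitute(
        brand=brand,
        attributes=", ".join(attributes),
        context=context,
        tone=tone,
        avoid=", ".join(avoid),
        exemplars="\n".join(f"- {text}" for text in exemplars),
        task=task,
    )


prompt = build_prompt(
    brand="Acme Analytics",  # hypothetical brand
    attributes=["direct", "warm", "practical"],
    context="thought leadership",
    tone="confident, evidence-first",
    avoid=["synergy", "game-changing"],
    exemplars=["Most dashboards answer questions nobody asked."],
    task="Write a 600-word post on measuring content ROI.",
)
print(prompt)
```

Keeping templates in version control alongside the voice guide makes it possible to trace a change in output quality back to a specific prompt change.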

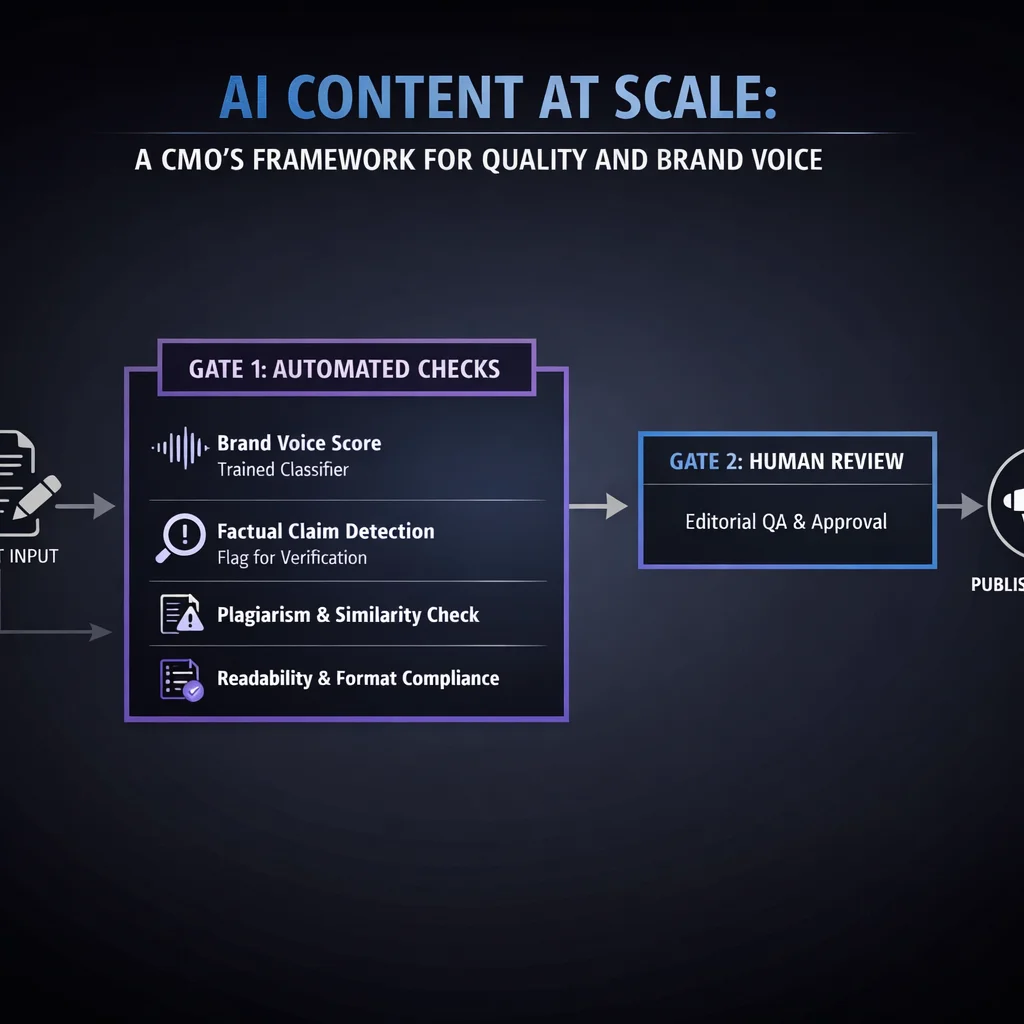

Multi-layered content validation combines automated checks with human-in-the-loop oversight. Your quality gate structure might look like:
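As one illustration of combining automated checks with human sign-off, the sketch below runs cheap automated checks first and only then asks for a human decision. It is an assumed design, not the article's own gate structure: the status names, the two sample checks, and the banned terms are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A check takes draft text and returns a list of issue descriptions (empty = pass).
Check = Callable[[str], list[str]]


@dataclass
class GateResult:
    status: str  # "rejected", "needs_human_review", or "approved"
    issues: list[str] = field(default_factory=list)


def check_banned_terms(text: str) -> list[str]:
    banned = ["synergy", "game-changing"]  # hypothetical terms from a voice guide
    lowered = text.lower()
    return [f"banned term: {term}" for term in banned if term in lowered]


def check_unsourced_statistics(text: str) -> list[str]:
    # Crude heuristic: any percentage must be accompanied by a "source:" note.
    if "%" in text and "source:" not in text.lower():
        return ["statistic without a source"]
    return []


def run_quality_gate(draft: str, checks: list[Check],
                     human_approved: Optional[bool] = None) -> GateResult:
    """Automated layer first; a human decision is required before approval."""
    issues = [issue for check in checks for issue in check(draft)]
    if issues:
        return GateResult("rejected", issues)
    if human_approved is None:
        return GateResult("needs_human_review")
    if human_approved:
        return GateResult("approved")
    return GateResult("rejected", ["rejected by human reviewer"])


checks = [check_banned_terms, check_unsourced_statistics]
bad = run_quality_gate("Our game-changing platform cuts costs by 40%.", checks)
clean_draft = "Our platform cut review time by 40% (source: internal audit)."
pending = run_quality_gate(clean_draft, checks)
approved = run_quality_gate(clean_draft, checks, human_approved=True)
print(bad.status, pending.status, approved.status)
```

The key property is that no code path reaches "approved" without an explicit human decision.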

Roles to define:

Governance anti-patterns to avoid:

The most effective AI content operations don't replace humans; they restructure human work around AI capabilities.

Total: 11-20 hours per piece

Total: 3-5 hours per piece

The human work shifts from production to direction and quality control. This is the right division of labor: AI handles volume, humans handle judgment.
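The two totals above imply a range of time savings. This short calculation works it out, assuming the 11-20 hour total describes the traditional workflow and the 3-5 hour total describes the AI-assisted one (the ordering suggests this, but the labels are an assumption). The `reduction` helper is hypothetical.

```python
def reduction(before_hours: float, after_hours: float) -> float:
    """Fractional time saved when moving from before_hours to after_hours."""
    return (before_hours - after_hours) / before_hours


# Assumed mapping: first total = traditional, second total = AI-assisted.
before_low, before_high = 11, 20
after_low, after_high = 3, 5

conservative = reduction(before_low, after_high)  # fastest old process vs. slowest new one
optimistic = reduction(before_high, after_low)    # slowest old process vs. fastest new one

print(f"Time saved per piece: {conservative:.0%} to {optimistic:.0%}")
```

Under that assumption, even the conservative case saves a little over half the hours per piece, roughly 55%, and the optimistic case saves 85%.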

Track these metrics to evaluate your AI content program:

| Metric | What It Measures | Target |
|---|---|---|
| Voice consistency score | How often content passes brand voice evaluation | 90% |
| Edit depth | How much human editing AI drafts require | 30% rewrite |
| Time-to-publish | Hours from brief to live content | 50%+ reduction |
| Performance parity | AI-assisted content vs. human-only benchmarks | Within 10% |
| Error rate | Factual errors, voice violations, compliance issues | 2% |

A 2025 McKinsey survey found that a majority of respondents report cost reductions from using generative AI for marketing content. But cost savings mean nothing if quality suffers. Measure both.
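Several of these metrics can be computed from data most teams already have. The sketch below is one way to approximate them, not a standard definition: edit depth is estimated as word-level dissimilarity between the AI draft and the published version using the standard library's `difflib`, and the function names and sample numbers are assumptions.

```python
from difflib import SequenceMatcher


def edit_depth(ai_draft: str, published: str) -> float:
    """Approximate share of the draft that was rewritten (0.0 = untouched)."""
    matcher = SequenceMatcher(a=ai_draft.split(), b=published.split(), autojunk=False)
    return 1.0 - matcher.ratio()


def voice_consistency(passed: int, evaluated: int) -> float:
    """Share of evaluated pieces that passed brand voice review."""
    return passed / evaluated


def performance_gap(ai_metric: float, human_metric: float) -> float:
    """Relative gap between AI-assisted and human-only results."""
    return abs(ai_metric - human_metric) / human_metric


# Illustrative numbers only.
depth = edit_depth("a b c d", "a b c e")             # one of four words changed
consistency = voice_consistency(passed=46, evaluated=50)
gap = performance_gap(ai_metric=0.047, human_metric=0.050)

print(f"Edit depth: {depth:.0%}")
print(f"Voice consistency: {consistency:.0%}")
print(f"Within 10% of human-only benchmark: {gap <= 0.10}")
```

Word-level diffing is a rough proxy. It counts a reordered paragraph as heavy editing and misses a single corrected fact that changes the meaning, so it works best alongside reviewer judgment.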

Symptom: Prompts like "Write a blog post about [topic]"

Result: Generic, forgettable content that damages brand perception

Fix: Invest in prompt engineering, training data, and quality gates

Symptom: AI content goes directly to publication

Result: Factual errors, voice drift, compliance violations

Fix: Human-in-the-loop is non-negotiable for the foreseeable future

Symptom: AI content consistently underperforms but nothing changes

Result: ROI never materializes, stakeholders lose confidence

Fix: Close the loop from analytics to training and prompts

Symptom: 18-month AI content platform initiative

Result: Market moves, technology evolves, project never delivers

Fix: Start simple, iterate fast, scale what works

AI content generation isn't just a production efficiency play; it's a competitive positioning decision. The organizations that build sophisticated AI content operations will produce more content, iterate faster, and learn quicker than those still relying on traditional production models.

But the advantage accrues only to those who maintain quality. An AI that churns out forgettable content at scale does nothing for your brand. An AI that produces distinctive, on-brand content at scale changes the game.

The framework matters more than the technology. Get the governance, training, and quality gates right, and the technology will follow.

This article is a live example of the AI-enabled content workflow we build for clients.

| Stage | Who | What |
|---|---|---|
| Research | Claude Opus 4.5 | Analyzed current industry data, studies, and expert sources |
| Curation | Tom Hundley | Directed focus, validated relevance, ensured strategic alignment |
| Drafting | Claude Opus 4.5 | Synthesized research into structured narrative |
| Fact-Check | Human + AI | All statistics linked to original sources below |
| Editorial | Tom Hundley | Final review for accuracy, tone, and value |

The result: Research-backed content in a fraction of the time, with full transparency and human accountability.

We're an AI enablement company. It would be strange if we didn't use AI to create content. But more importantly, we believe the future of professional content isn't AI vs. human; it's AI amplifying human expertise.

Every article we publish demonstrates the same workflow we help clients implement: AI handles the heavy lifting of research and drafting, humans provide direction, judgment, and accountability.

Want to build this capability for your team? Let's talk about AI enablement →
